Lexical Analyzer

|

|

The Role of the Lexical Analyzer

In the overwhelming majority of computer languages, a program's source code takes the form of a stream of text. While this form makes editing a program a simple matter with conventional tools, an unstructured (from the perspective of the compiler) series of characters is not readily parsed in a meaningful manner. It is the role of the lexical analyzer to divide up the character stream into an equivalent stream of tokens which can be more easily manipulated and parsed. This is the source of the term tokenizer as an alternate name for the lexer.

Tokens

A token is a classification of a character or group of characters as a unit that the parser can manipulate. The usual form of a token has two parts, a token type marker which describes the token, and a literal string which is the actual character sequence from the original character stream. In C, this can be implemented as an enumeration for the list of token types, and a struct type for the tokens themselves. To give a simple example of a language which has one-digit numbers, one letter variables, an addition operator, a multiplication operator, and left and right parentheses, you might define it as:

enum TokType {CHAR_LITERAL, INT_LITERAL, ADDOP, MULOP, LPAREN, RPAREN};

struct Token

{

TokType token_type;

char token;

};

Finite State Automata

A tokenizer can be written to directly as a case analysis (i.e., a switch statement or series of if-else if-else statements) that reads each character in the text, but early in the history of compiler design it was recognized that, for almost all practical programming languages, the source stream could be described in terms of a regular grammar, that is, a grammar that can be expressed in terms of a regular language. A regular language is a simple type of language consisting of a series of simple branching and repeating pathways that can be recognized by an abstraction called a Finite State Automaton.

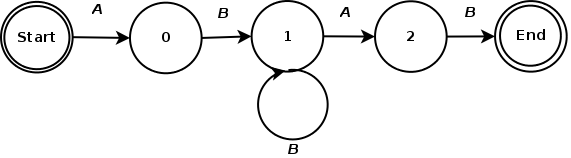

Finite state automata, or FSAs, are state machines in which there are a fixed number of possible transitions. In the case of a lexer, each state of the FSA except the start and end states matches a valid character that can appear in the sequence that yields a valid token. For example, if you have a language in which there can be an 'a', followed by any number of 'b's, followed by 'ab', then you could describe the language in terms of the following state diagram:

The trick now becomes applying this diagram form to a program. The simplest way is to write it as a case analysis, with each possible case testing whether the character is a valid lexeme in the language, and if it can appear in the position it shows up in. Each sub-case would be handles by a separate function.