User:Kmcguire/Quick And Dirty Virtual Address Space Scheme

The Quick And Dirty Virtual Address Space Scheme

This quick and dirty virtual address space scheme will take you step by step through a entire kernel sub-system that will provide the virtual address space for every user space process and the kernel while shadowing all page directories and tables by using direct mapping from physical into the virtual address space. All implementations are broken down into parts, and step by step we examine them together to try and provide not just a copy and paste bucket of code but instead a actual understanding.

Target Audience

If you have never read any of the Intel or AMD documentation for the 80386 and higher generation processors, comprehended a overview of the 80386 and higher virtual address space mechanism, or know the different in a virtual and physical address then you are most likely not a target for this book. Preferably someone who has attempted or successfully implemented a memory management scheme and would like to improve or continue it and needs a idea or some in depth explanation will benefit highly from this book. However you must realize that even if you are not a targeted audience this book could still help you, provide some valuable insight into you're future work, or allow to to realize if you are a targeted audience.

The Overview

I am going to try to lead you as gently as possible through two complete memory management schemes: physical and virtual. The first part of this document is about physical page management. The second part is about virtual page management. Both parts will be covered by explanation and implementation. The only part where you may could become confused is when we implement a limitation in our physical memory management.

I will try to lead you as gently as possible through two complete memory management schemes like I lead my own self when writing something. So not only should you gain a understanding of the contents, but the ability to understand how to break a solution into layers, especially for programming. You should know that this book is broken into two major parts: Physical Page Management, and Virtual Address Space Management. The physical page management will hopefully help you solve problems by giving a in depth and step by step examination of a basic allocator, while the virtual address space management is going to shed light on a scheme that is very successful at providing performance and less debugging.

Physical Page Management

This will provide some ground work, which is essential to the understanding of the virtual page management. We will discuss a few different approaches and generalize what a physical page management implementation should do. I will also use the byte map allocation technique since I feel it is very simple, and the memory requirements are simplistic at best.

Physical memory management is like having a lot, and I mean a lot, of places to put things and of course each of these places, boxes, cupboards, bins, or rooms that need to be kept track of. Imagine having a large warehouse with millions of boxes, and having to walk for thirty to sixty minutes just to reach a box in this building. First to take note of is that this would be literally insane. Second to also note is that the contents of each box is so similar to the other million boxes that just by looking inside one you could never deduce what it represents. This can be the equivalent of a physical memory, not the management since you have to walk in this warehouse to each box to check it. So literally no management exists, and upon that fact it is also just simply impossible to do with out management unless you liked living in a warehouse looking at boxes twenty four seven

Our management information will help us know things about the place, boxes, cupboards, bins, or rooms if you wish so that we can better find what we are looking for. Luckily the matter at hand in this book and for this chapter is just to keep track of weather something is actually in the box or not. I know the example above is strange in the sense but truly imagine searching through memory looking at the data contained on the page, and trying to determine if it is being used or is free to use just by the data contents. We could keep pages that can be used, which are unused by anything else, zeroed but the possibility arises that something may need to write all zeros thus the example should make sense that of these million boxes there is no way to determine what their contents are for when everything looks extremely similar.

We will provide some ground work here, but nothing overly complicated. A few nuts and bolts will be inserted towards the end, but overall do not expect anything that requires actually engaging the brain into overdrive. We really want to save that for later on. Included in the ground work is information and implementation for the byte map and the encapsulating function calls to work with this byte map by enslaving it to do you're evil bidding and take over the world. Ok. Well. I suppose that would be too much for it to handle, but it does allocate physical memory pages!

Discussing The Algorithm

There are many alternatives to the technique I will discuss in this section. You are welcomed to try new stuff, and use something even drastically different then what I use here. The only reason not to do something different is if it will keep you from understanding the sections here and forth.

A byte map consists of a sequential list of bytes, where each byte in this list - maps information to a page address. The page addresses we will be mapping to are the physical ones. Each byte holds a value that represents attributes, or just a attribute in our case, about that page. The byte index within the byte map is used to compute the actual page address.

Many algorithms exist that can provide us a allocation mechanism but some have very complex internals, little documentation, or other unnecessary functions and uses which would could cause bloating of the explanation by a exponential factor, decrease likely hood of comprehension, and turn away some people who are not trying to change the world with a memory management scheme. With these in mind I have decided to use the most basic design I could think of. Now this implementation is by no means the most efficient or the fastest but it does provide good all round performance and will keep the bugs down which means less debugging time. From my experience debugging time can make a project become abandoned, and with good reason.

A few algorithms are the Buddy Allocation System which is used by the Linux kernel, [Linked Lists], [AVL Trees], Map Allocators (which are used here in this book), and many more. You can find more references and links at the ending of this book.

The Byte Map Approach

The implementation of the allocator algorithm consists of three major parts, and a voodoo doll. No the doll is not included and it must be purchased separately to aid in some religious debugging rituals when everything fails to operate as intended but that should not happen. The initialization, allocation, and deallocation form the three major parts and are in reality very simple or can be extremely complex if you choose to do a different implementation later on so be aware.

The parts in our byte map allocator are very simple in understanding and implementing, and by implementing I mean the processing of putting some code into effect using a plan which you will find our as we move along. I thought I would save some time for the impatient and do both at the same time. For initialize we need to get things ready. Set default values, or use provided values to configure and optimize. Of course this allocator will do a little less than optimize it's self since I consider that a advanced and a not relevant topic for a quick and dirty virtual address space scheme. A allocation is when you set something apart or designate it for a special purpose. The reason we need to set something apart is to keep other independent parts of our system from meddling with what we what to use while we use it. Our allocation will be written in C and made callable as a function that will return a not designated or free physical page memory address, and right before this record it as being allocated or designated.The deallocation reverses the allocation procedure, and turns this designated or allocated physical page into a unallocated and not designated state. While I have just used the word state I would like you to know that in basic terms we are simply changing the state of a physical memory page.

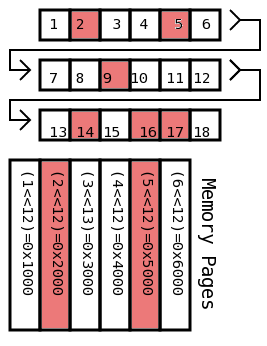

(Left) A depiction of a byte map and the memory pages associated with each byte. Also for worth noting the byte map could be a bit map which would use bits instead of bytes to represent the state of a page. The address coropsonding to the index in the byte map is used to produce the address in memory by shifting the address to the left by twelve. In the C language a shift operation is denoted by (index<<12) and thus such as in the depiction is used as text inside each page on the bottom. Also the color represents that byte in the map having a different value. This value is used to set if the byte is allocated or not. A byte can have two-hundred-fifty-six different values due to the eight bits being used to form a byte which range from zero to two-hundred-fifty-five. We only need two values for this simplistic design, but from a tutorial stand point bytes are easier to work with. Try creative uses for the byte map such as storing other information about the page such as: what it is allocated for, who allocated it, what permissions for allocation does it have, and many other possibilities.

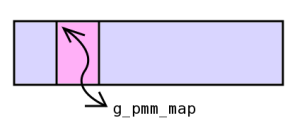

Implementation Global Data

We will need two pieces of information to be declared global. This will prevent us from having to constantly pass this variable to every function call, and indirectly decrease the chance of passing the wrong argument in it's place. It is like accidentally passing degrees to a function when it expects radians since you kept the conversion in the same variable. I hope you would not have much to keep in the same variables (below) but that is another story.

unsigned char *g_pmm_map = 0; unsigned int g_pmm_reqpages;

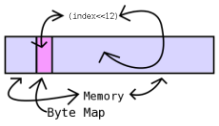

The Map Of Bits

The map of bits is pointed to by g_pmm_map, which also in turn treats this continuous area of memory as a array of eight bit bytes. As a quick run through we will use g_pmm_map to access and modify attributes for each page of memory we track. For example to get the attributes for a certain page in memory using any address we shift the address to the right twelve bits and use the result as a index into g_pmm_map. If you are not quite sure you understand why we make the bit shift to the right you need to do a search with [[1]] on google and then come back. It will not take long. I promise.

attributes = g_pmm_map[address>>12];

Of course you may notice that using this calculation we are considering our map starting at page address zero. This will be the default case through the remaining of this writing since: I want to keep the algorithm as simple as possible, and I need the implementation to handle the entire four gigabyte address space if requested.

Initialization

Lets first prototype the two initialization functions we will need, and I know I said there is only three parts with one of them being initialization. I just did not say how many items are in that section. Do not get discouraged this is a lot easier than you think just read on.

void pmm_setmaxmem(unsigned int max); void pmm_init(unsigned int page, unsigned int count);

You might know we needed a initialization function such as: pmm_init. But, the setmaxmem is most likely closer to throwing a monkey wrench in the works - do not worry we can work this out. The setmaxmem function will help configure the pmm to support the specified amount of memory. For example if we only need to support at maximum sixteen megabytes of memory, and instead we had no setmaxmem a default configuration would have to be used which might always initialize for four gigabytes instead. There would have to be a significant amount of wasted memory, and even though we like to waste memory at the beginning it does not make sense to go overboard and drown in clam waters.

Here we need to find out how much of the memory we are about to manage - we need ourselves to do the actual managing. It seems as silly as saying I want to buy something and part of what it costs should pay for it too. Although that sounds like it could confuse the nice store clerk for several seconds I imagine it would not work not because it is impossible since our system of currency would fail. Fortunately we are the money makers or rather the memory owners and we can do what we like which is simply using some of something for that something.

pageCount = (maxmem>>12)+1;

Using the above formula we can determine from the maximum amount of memory we wish to support that we will have ((maxmem>>12)+1) pages at most. This directly gives us the required bitmap length in bytes (or bits for a alternative implementation). Also we shift to the right once again. Since maxmem will be a thirty-two bit value that should represent the amount of memory detected in the system it indirectly holds the number of four kilobyte pages present too. I also add one in the case of a partial page which could be something like 0x1002 (four-thousand-ninety-eight) which is a page and one partial page. You could devise a more advanced mechanism here to detect a partial page and then add one if needed, but I decided to keep things simple.

Lets see if we can work out a simple implementation for this pmm_setmaxmem function. The function argument is the amount of memory detected in the system, or at least what we want to think it is.

void pmm_setmaxmem(unsigned int max){

g_pmm_reqpages = (max>>12)+1;

}

We just set a global variable to hold the result. All we wanted to do is provide a nice interface in the form of a function call to keep the distracting odd names and cryptic variables names out of the calling code.

Now, lets try to implement our pmm_init function in a layered manner or little steps to help you comprehend actually what is going on instead of a bag of jumbled code that looks more like someone someone never wanted you to read.

void pmm_init(unsigned int page, unsigned int count){

for(x = 0; x < count; ++x){

g_pmm_map[(page>>12)+x] = 0;

}

return;

}

We take the range and make sure all bytes for each page in the range are set to zeros to represent a unallocated state or free. We have a problem unfortunately and it lies in the fact that we still need to figure out where our map is in memory. We already know how big it needs to be so the simplest method would be to hope that the first memory range given can hold it. This inherently will introduce problems, but since I wanted a simple base to use to write our virtual memory management implementation I thought we could slip by here and if you like you can come up with something better. To understand how to use it properly see the end of this book in the examples section.

void pmm_init(unsigned int page, unsigned int count){

if(g_pmm_map == 0){

if(count >= g_pmm_reqpages){

g_pmm_map = (unsigned char*)page;

page += (count<<12);

count -= g_pmm_reqpages;

pmm_strip((unsigned int)g_pmm_map, g_pmm_reqpages);

}else{

return;

}

}

for(x = 0; x < count; ++x){

g_pmm_map[(page>>12)+x] = 0;

}

return;

}

Now, you will notice we see if the g_pmm_map does not exist then check if the first range passed is big enough. The reason we can check the existence of it is because its global declaration gives it a initialization value of zero or null. I include no error handling if the initial memory range, when g_pmm_map is zero, is not large enough since I feel the actual determination can be made outside of the pmm which is before the pmm_init call. There is also a unknown function included just ignore it until a little later but for a little information it is the same as using what something costs to pay for it's self.

Allocation

We will produce a prototype for the allocation function which will be responsible for returning a pointer to one page of memory.

unsigned int pmm_alloc(void);

The map of bits is pointed to by g_pmm_map, which also in treats this continuous area of memory as a array of eight bit bytes. As a quick run through we will use g_pmm_map to access and modify attributes for each page of memory we track. For example to get the attributes for a certain page in memory, using any address we take the address and shift it to the right twelve bits. If you are not quite sure you understand why we make the bit shift to the right you need to do a search with [[2]] on google and then come back. It will not take long. I promise.

attributes = g_pmm_map[address>>12];

Of course you may notice that I have already said this. I wanted to bring it up to the front of you brain so that when I say, "You can also start at array index zero and increment while you a less than g_pmm_reqpages." Lets add a layer that does just this: We need to walk through the bitmap, and we know that the first page at memory address zero has it's attributes stored at g_pmm_map[0] since I told you earlier that you may notice that using this calculation we are considering our map starting at page address zero. This will be the default case through the remaining of this writing since: I want to keep the algorithm as simple as possible, and I need the implementation to handle the entire four gigabyte address space if requested. So with that in mind we still have a problem or knowing at what array index to stop? Well the question can be answered by reviewing the pmm_setmax function and understanding that we can determine from the maximum amount of memory we wish to support that we will have ((maxmem>>12)+1) pages at most. This directly gives us the required bitmap length in bytes (or bits for a alternative implementation). So since we are walking the bytes in the map and we know that each byte represents one page of memory we should deduce that g_pmm_reqpages used in the pmm_setmax function holds the number of pages and bytes that our map tracks. Lets walk until we are right below that number since our array starts at index zero not one.

...

unsigned int x;

for(x = 0; x < g_pmm_reqpages; ++x){

// This g_pmm_map[x] is valid.

}

...

This is extremely useful for one major reason. We can now search the bitmap for pages that are unallocated since we know that there is a direct mapping between the array index and the page address with g_pmm_map[address>>12]. With this new found searching ability we should now be able to satisfy the request for one free page.

...

unsigned int x;

for(x = 0; x < g_pmm_reqpages; ++x){

if(g_pmm_map[x] == 0){

g_pmm_map[x] = 1;

return (x<<12);

}

}

...

You should notice that we shifted x left twelve bits. This is because the index is created from a address by shifting that address to the right twelve bits and the reverse will produce the whole address. Taking that address == ((address>>12)<<12). We simply got the index of a address with (address>>12) and turned it back into address using (address<<12). The only other switch is address becomes the index [] and then back.

Lets see if we can write out the entire implementation for the alloc function, and produce a completed pmm_alloc implementation with no bugs!

unsigned int pmm_alloc(void){

unsigned int x;

for(x = 0; x < g_pmm_reqpages; ++x){

if(g_pmm_map[x] == 0){

g_pmm_map[x] = 1;

return (x<<12);

}

}

}

Striping

When we strip we are talking about removing a entire physical address range from our allocator. A perfectly good example is where the kernel is loaded, and even where our g_pmm_map is located. If you remember we left a function call in our pmm_init function called pmm_strip and if you think about it long enough you might realize that strip is short for stripping just for brain exercise. Lets try implementing this here starting with our prototype.

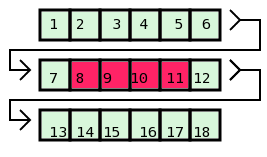

(Left) A depiction of green square representing byte in the map that are in a unallocated state, while the red show ones that have been set as allocated. A strip is exactly like a allocation except no address is returned. It just reserves the pages or removes them from ever being allocated by the allocator out of the byte map.

void pmm_strip(unsigned int page, unsigned int count){

unsigned int x;

for(x = 0; x < count; ++x){

g_pmm_map[(page>>12)+x] = 1;

}

return;

}

Its just a matter of running through the count in a loop, while adding the offset as page) shifted down twelve bits to it and setting a one there. Since nothing should ever allocate it. It should never be freed unless a bug crawls along and buries its self into your code. Ouch. And what good reason would you have for code overwriting the kernels .data and .text sections.

Deallocation

We have seen how to allocate a page by setting the appropriate byte in our map, and as you might have guess we deallocate it by setting this byte back to zero or what ever may be the deallocated value. Lets try our hands at writing it.

void pmm_free(unsigned int address){

g_pmm_map[address>>12] = 0;

}

Very simple. I know. Since there is a direct mapping between the array index and the page address we just exploit that fact by shifting the address to the right twelve bits, and setting the value to zero to represent a unallocated page.

We Need Limitations

We need limitations in order to make a slip fit with our virtual memory manager. Some of the careless allocation that we perform above just will not sit well with our virtual page management. I am going to avoid explaining exactly why, but as you read this section you may figure out why before I actually get there. These limitations are in the form of ranges. What we need to do is to break the contiguous memory we are working with into parts, or ranges. In our implementation we only need one range at the moment.

A range is a offset with a count. A range also needs to have a dynamic state during the initialization of our physical memory manager. So we should be able to set a range and leave it for good - in our early form at least! We are only going to use one range right now, and the form will be in a global variable which is accessible through a function call.

unsigned int g_pmm_vmm_offset; unsigned int g_pmm_vmm_count;

The functions, which need to be self explanatory.

void pmm_set_vmm_offset(unsigned int offset){

g_pmm_vmm_offset = offset;

return;

}

void pmm_set_vmm_count(unsigned int count){

g_pmm_vmm_count = count;

return;

}

A Limitation Implementation

I know the suspense is killing you, but wait the truth is here. We need to make our implementation understand that it should never allocate pages inside this range that we have given it with (g_pmm_vmm_offset) to (g_pmm_vmm_offset+g_pmm_vmm_count). If it does there will be lots of sparks if you use the virtual implementation coming up.

Before we get that far we need to find a way to keep our allocation from ever getting inside this range to even check the byte for a state. Lets take a look at our old culprit.

unsigned int pmm_alloc(void){

unsigned int x;

for(x = 0; x < g_pmm_reqpages; ++x){

if(g_pmm_map[x] == 0){

g_pmm_map[x] = 1;

return (x<<12);

}

}

return 0;

}

You will notice we just move through all the bytes until we find one that is a zero, and because the value (x) is a direct mapping to the page address we know what to return to the requester. This is where we need to insert a sanity check of: Is this inside our range?

.....

for(x = 0; x < g_pmm_reqpages; ++x){

if((x < g_pmm_vmm_offset) || (x >= (g_pmm_vmm_offset+g_pmm_vmm_count))){

if(g_pmm_map[x] == 0){

g_pmm_map[x] = 1;

return (x<<12);

}

}

}

.....

What this says is, "If x is less than (g_pmm_vmm_offset) then we have not made it to the range starting point yet.". It also states that, "If x is greater than or equal to (g_pmm_vmm_offset+g_pmm_vmm_count) then we are past the entire range". If either of these two conditions are true, hence the OR operator, then we should continue checking if this page is allocated or unallocated.

The final result will be a contiguous range of pages that are never allocated. The number of pages will be static for now to keep things simple.

unsigned int pmm_alloc(void){

for(x = 0; x < g_pmm_reqpages; ++x){

if((x < g_pmm_vmm_offset) || (x >= (g_pmm_vmm_offset+g_pmm_vmm_count))){

if(g_pmm_map[x] == 0){

g_pmm_map[x] = 1;

return (x<<12);

}

}

}

return 0;

}

Getting Creative

Getting creative is going to involve coming up with a better allocation and deallocation algorithm, and even better figuring out a method to dynamically change the g_pmm_vmm_* range above. But, just for the beginner lets try to focus on making a better allocator rather then a better limitation range until you read the next sections.

Virtual Page Management

This will provide the actual implementation and explanation on top of the previous physical page management ground work. If you have altered or changed the original one to suit needs you have to be sure you can do a nice slip and fit or at least read how I implemented the previous one.

The major disadvantage when working with page directories and tables when you are working in a virtual addressing mode is if the addresses in the directory and tables point to pages that are not directly mapped right into our current address, space since the processors internal mechanism only understands physical pages not virtual ones, it can cause a explosion of sparks or lots of data looking like scrambled eggs.

The obvious fix is to simply made correction to your calculations such as: performing a reverse lookup of the table to find where it is mapped in the current address space, searching a precomputed list of translations just for directories and tables, and others. The reasoning I have not to do either of the two later methods is if you have already mapped the pages into the current address space to even think about performing a reverse lookup then why not have just mapped them in with direct mapping such as mapping physical address 0x183000 to virtual address 0x183000 in the first place? This should save clock cycles from the calculations and just make working with page directories and tables much easier.

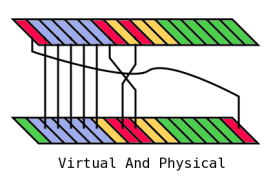

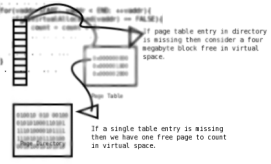

(Left) A depiction of the virtual and physical address spaces laid out in pages. Of course in the picture above the machine looks like it has less than twenty pages of memory, but it is only for a example. You will notice that are four colors: blue, red, orange, and green. The blue is the direct mapped page directories and tables area in memory, red is miscellaneous kernel allocated pages, orange repersents the actual kernel .text and .data sections and such other and also the IDT and GDT type tables which are also direct mapped just like the blue, and green which repersents free memory that can be used by user space processes and even the kernel (which would convert green to red for the kernel and I use no color in the depiction to represent user space allocated pages). You should also take into mind that in the top virtual color bar green is only found to the right. This is where the virtual memory has been split with kernel on the bottom and user space on the top. On the bottom color bar representing physical memory you can see that the green and red pages mix together some which allows the power of the 80386 paging mechanism. You will note too that the blue are required to be direct mapped and this forms the entire premise and maybe encompasses the entire ending part of this tutorial. How do we get blue direct mapped, spit the virtual address space, and let the physical address space mix.

Also we are going to split the virtual address space into two parts. For the sake of keeping things simple I am using the lower part of the four gigabyte address space for the kernel, while the remaining is used for the user space processes. If you do something different it should be trival to understand the modifications necessary.

Internal Allocation

Our scheme will use two allocation functions. One shall be used internally for allocation of directories and tables while the other will be our original pmm_alloc. We will try to produce a implementation of a internal one first, then work towards the second implementation or external if you will.

If you went through the section for the physical page management you will remember that we talked about limitations. We also implemented a range that the allocator was never supposed to touch. That range is where this internal vmm allocator will get pages from to use. The reasoning is we need to try to keep from clobbering up random pages in the upper memory which will be used by user space applications, and from clobbering all the available physical memory that has direct mapping potential.

Lets try writing a implementation that will provide internal allocations. We are going to try to use the three global variables from the physical memory manager. If you have written a different physical management scheme you will need to alter this entire function to use you're method.

unsigned int pmm_vmm_alloc(void){

for(x = g_pmm_vmm_offset; (x < (g_pmm_vmm_offset+g_pmm_vmm_count)) && (x < g_pmm_reqpages) ; ++x){

if(g_pmm_map[x] == 0){

g_pmm_map[x] = 1;

return (x<<12);

}

}

return 0;

}

If you notice we provide two conditions. The first says if x is less than our range's end and if x is less than the last page in our map then keep going. If not we need to exit with a zero or null.

Helpful Macros

Now that we have the start of our implementation written lets design two macros to assist when accessing our page directory and tables.

#define VMMDIR(t, x) ((unsigned int *)t[(x>>12)]) #define VMMTABLE(t, x) VMMDIR(t, x)[t>>22]

This allows use to specify VMMTABLE(vdir, address) with vdir being a unsigned int pointer to our virtual directory, and address being the virtual page address we wish to access inside the table. Of course there are a few holes which you will fall in such as: What if the directory exists but not that certain table? We will cover that next, and even find a example of this happening.

Finding Free Pages

Finding free pages is not all too hard. If you are concerned with performance you just might never finish the project, but for the most part keeping a level head and having forward progress is enough to understand this implementation since there is always time for optimization. The first part is our function prototype.

unsigned int vmm_findfree(unsigned int *vdir, unsigned int count, unsigned int index4mb, unsigned int icount4mb);

Here we want to pass a unsigned int pointer of our page directory, count as the number of continuous pages we need, index4mb as the four mega byte page address we may start our search at (pre shifted down twenty two bits), and icount4mb as the number of four megabyte pages starting at index4mb. This gives us flexibility to tell the function, "Hey, I want this many pages allocated in the kernel's half of memory." or "Hey, I want this many pages allocated in the user processes' half of memory.".

The second part is building a loop so we can move through each table entry of the directory, and if a table exists each of it's entries.

...

unsigned int x;

for(x = index4mb; x < (index4mb+icount4mb) ; ++x){

// check directory entry

if(vdir[x] != 0){

for(y = 0; y < 1024; ++y){

// check table entry

}

}

}

...

Now, this is great - but we are still missing a critical element. We must keep count of how many free pages we find. Lets try that.

...

unsigned int *table;

unsigned int x;

unsigned int cfreepage = 0;

for(x = index4mb; x < (index4mb+icount4mb) ; ++x){

if(vdir[x] != 0){

table = (unsigned int*)(vdir[x] & 0xFFFFF000);

for(y = 0; y < 1024; ++y){

if(table[y] != 0){

++cfreepage;

}

}

}

}

...

You will notice I create another unsigned int pointer to hold the table address just to simplify the code. I also used 0xFFFFF000 to zero the lower twelve bits of each directory entry since they only hold attributes for the page. I named cfreepage to represent the number of consecutive free pages which is flawed, of course!

Take note that the above implementation is wrong if we encounter a non-free page. This is because instead we should reset on encountering non-free pages. Also flawed is if a table does not exist we are completely ignoring it instead of counting one thousand and twenty four pages. It was holding all those pages and was not allocated right? Lets try a rewrite:

...

unsigned int *table;

unsigned int x;

unsigned int cfreepage = 0;

for(x = index4mb; x < (index4mb+icount4mb) ; ++x){

if(vdir[x] != 0){

table = (unsigned int*)(vdir[x] & 0xFFFFF000);

for(y = 0; y < 1024; ++y){

if(table[y] != 0){

++cfreepage;

}else{

// reset the count

cfreepage = 0;

}

}

}else{

// count completely blank tables

cfreepage += 1024;

}

}

...

A working directory and table walking function with the ability to find a consecutive range of free virtual pages will fit nicely in our next sections for a utility function. Lets get a complete version written real quick.

THIS FUNCTION IS BROKEN The concept will work but it was coded wrong!

unsigned int vmm_findfree(unsigned int *vdir, unsigned int count, unsigned int index4mb, unsigned int icount4mb){

unsigned int *table;

unsigned int x;

unsigned int cfreepage = 0;

unsigned int opage = index4mb << 22;

for(x = index4mb; x < (index4mb+icount4mb) ; ++x){

if(vdir[x] != 0){

table = (unsigned int*)(vdir[x] & 0xFFFFF000);

for(y = 0; y < 1024; ++y){

if(table[y] != 0){

++cfreepage;

if(cfreepage >= count){

return opage;

}

}else{

// reset the count

cfreepage = 0;

// this should end up being the first page notice the (y+1) and currently y=y+0.

opage = (x << 22) + ((y+1) << 12);

}

}

}else{

// count completely blank tables

cfreepage += 1024;

}

}

return 0;

}

I added the opage to keep track of the original page we start counting at. There are only two reasons we starting counting from zero. We reach a page that is current in use, or we have just called into the function. Note that opage equals index4mb << 22, which is the very first four megabyte page we start at (or table). Lets see about writing a mapping implementation.

Mapping

Mapping is extremely easy except for the now slightly complicated and dangerous corner case scenario we have created with the direct mapping, but.. it is worth it. Lets venture forward with creating our prototype for the first function.

void vmm_map_single(unsigned int *vdir, unsigned int phy, unsigned int virt, unsigned int flags){

VMMTABLE(vdir, virt) = (phy & 0xFFFFF000) | flags;

return;

}

Not bad huh, Surprised? You should be since using that would lead to a explosion of bits and bytes guaranteed. It completely ignores the fact that the table the virtual address resides in might not exists at all. Lets add some code to check for this and try to correct it.

....

unsigned int table;

if(VMMDIR(vdir, virt) == 0){

table = pmm_vmm_alloc();

VMMDIR(vdir, virt) = table | flags;

for(x = 0; x < 1024; ++x){

((unsigned int*)VMMDIR(vdir, virt))[x] = 0;

}

}

VMMTABLE(vdir, virt) = (phy & 0xFFFFF000) | flags;

....

I first check if the table exists. If not I create it and then proceed to clearing it by converting the unsigned int value into a pointer and looping through all one thousand and twenty four entries. Then we set the appropriate entry with the macro VMMTABLE. We have still forgotten one important element to the entire process. That is to keep a direct mapping of the directories and tables. Lets try once again to get this right!

....

unsigned int table;

if(VMMDIR(vdir, virt) == 0){

table = pmm_vmm_alloc();

VMMDIR(vdir, virt) = table | flags;

// mapping table

vmm_map_single(vdir, table, table, flags);

for(x = 0; x < 1024; ++x){

if(

((table >> 22 << 10) == (virt >> 22 << 10)) &&

((table << 10 >> 10 >> 12) == (x))

){

// skip it

}else{

((unsigned int*)VMMDIR(vdir, virt))[x] = 0;

}

}

}

VMMTABLE(vdir, virt) = (phy & 0xFFFFF000) | flags;

....

Wait. What is this? A recursive call it must be, and you are right. We need to keep a direct mapping, and for those of you not quite up to date this should do the trick in example. The table can now be referenced in both physical and virtual address modes. You will also notice some mess of shift operators. I am first saying if table is going to need to be mapped into the same table as virt and the current (x) references the same entry we are currently on then skip it. This is a corner case scenario that I had to learn the hard way.. ouch. Afterwards, we set the table entrie with the macro VMMTABLE like usual or so we thought from the get go.

There does exist a case in which every pmm_vmm_alloc call could return a address that resides on a different table. This could cause a severe performance hit if it happens, but the likely hood is low and the all around saying could be that you would have had to allocate some more tables anyway so why not do it early on? [humor]

Lets try to tie this together into one complete function implementation.

void vmm_map_single(unsigned int *vdir, unsigned int phy, unsigned int virt, unsigned int flags){

unsigned int table;

if(VMMDIR(vdir, virt) == 0){

table = pmm_vmm_alloc();

VMMDIR(vdir, virt) = table | flags;

// mapping table

vmm_map_single(vdir, table, table, flags);

for(x = 0; x < 1024; ++x){

if(

((table >> 22 << 10) == (virt >> 22 << 10)) &&

((table << 10 >> 10 >> 12) == (x))

){

// skip it

}else{

((unsigned int*)VMMDIR(vdir, virt))[x] = 0;

}

}

}

VMMTABLE(vdir, virt) = (phy & 0xFFFFF000) | flags;

}

Allocation

Allocation is a rather simple process but does involve one pitfall. This, is inherently, that we need to know what the pass the findfree function so we can keep kernel and user processes' virtual memory separated. You will find out when we write our initialization function why this is so. Lets get started with our prototype.

unsigned int vmm_alloc(unsigned int *vdir, unsigned int vindex4mb, unsigned int vcount4mb, unsigned int flags);

The vdir contains a unsigned int pointer to our page directory, vindex4mb is a four megabyte index or table index, vcount4mb is the count of four mega byte blocks we wish to allocate with in starting at vindex4mb, and flags are the attributes we wish to assign this allocation. Lets see if we can write a some code to acquire a free page and then allocate it.

... unsigned int virtpage = vmm_findfree(vdir, 1, vindex4mb, vcount4mb); unsigned int phypage = pmm_alloc(); vmm_map_single(vdir, phypage, virtpage, flags); return virtpage; ...

You should notice we first search for a free virtual page spot with count one. Next, we get a free physical page from our physical memory management function we wrote a while back. Finally, we actually map the physical page to the virtual page, and return the virtual address contained in virtpage. You might have noticed that we used a vindex4mb and vcount4mb. In our next two functions you will see why.

Kernel And User Allocations

In basic terms we need two separate ranges to be used and called by different functions to provide a kernel space and a user process space. You will understand why when we arrive at implementing our initialization function. Until then lets define our prototypes.

unsigned int vmm_ualloc(void); unsigned int vmm_kalloc(void);

And, the implementation which uses two constants that split our kernel and user space into two (two gigabyte) regions.

unsigned int vmm_ualloc(unsigned int *vdir, unsigned int flags){

return vmm_alloc(vdir, 0x1FFFFFFF>>22, (0xFFFFFFFF>>22) - 1, flags);

}

unsigned int vmm_kalloc(unsigned int *vdir, unsigned int flags){

return vmm_alloc(vdir, 0x0, (0x1FFFFFFF>>22) - 1, flags);

}

This is a point in which you may wish to change these constants, or convert them into a more complex system that could suit you better. For development they should be ok.

Directory Creation

Here is the king of all function implementations. This one ties them all together, and even attaches a little ribbon and teleports you to another dimension... Well not quite that much but it does tie everything together! This time we need to define a little more than just a function prototype.

Data Structures

struct tvmmDir{

unsigned int *vdir;

struct tvmmDir *next;

};

This is our linked list item, and will be used to track all page directories in use by the kernel.

Global Data

struct tvmmDir *g_vmm_dir = 0;

I want you to use this global variable to keep a hook to the first entrie in the link list at all times.

Implementation

And, of course our actual implementation.

unsigned int* vmm_create(unsigned int *vdir, unsigned int flags);

A little trickier here so pay attention. The vdir is the current virtual address space we are executing in, it could be null or zero as I will explain later. The flags argument are normally going to be the default kernel attributes you wish to specify. I left it as a argument in case you were debugging and wish to write a quick user space application to access kernel memory (including the page directory page). Lets dive a little deeper, by exploring the code to create this initial page to be used as a directory.

...

unsigned int *dir = (unsigned int*)pmm_vmm_alloc();

// add new dir to global dir link list

nl = (struct tvmmDir*)<YOUR MALLOC IMPLEMENTATION>;

nl->vdir = dir;

if(g_vmm_dir == 0){

nl->next = 0;

g_vmm_dir = nl;

}else{

nl->next = g_vmm_dir;

g_vmm_dir = nl->next;

}

....

Here we allocate a page from a special function pmm_vmm_alloc that returns a physical page address from that speacil range we created earlier. If you implemented your own then it should also return a page that can be directly mapped. Next, we either start the link list or add a new item to it keeping the next pointers updated correctly. Lets see about doing direct mapping on this directory page so it can be accessed in the current address space.

...

struct tvmmDir *nl;

unsigned int *dir = (unsigned int*)pmm_vmm_alloc();

// add new dir to global dir link list

nl = (struct tvmmDir*)<YOUR MALLOC IMPLEMENTATION>;

nl->vmm = dir;

if(g_vmm_dir == 0){

nl->next = 0;

g_vmm_dir = nl;

}else{

nl->next = g_vmm_dir;

g_vmm_dir = nl->next;

}

// map into current virtual address space for accessing

vmm_map_single(vdir, (unsigned int)dir, (unsigned int)dir, flags);

// clear entire directory

for(x = 0; x < 1024; ++x){

dir[x] = 0;

}

....

We first map it so the processor will not generate a page fault exception, then we clear each entrie being one thousand and twenty four of them. Now, lets create a sync implementation so the changes are commited across all page directories.

Sync Implementation

Lets during the middle of implementing our intialization function go ahead and create a propagation implementation. What happens is we store a individual page directory for each process, but we shared the bottom two gigabytes with each process using tables. Yet, sometimes like in this instance we need to modify another process' page directory and tables so the solution becomes to simply keep every directory and table always mapped into the kernel's virtual space. To do this we must also make sure we keep the other directories syncronized.

void vmm_sync(unsigned int *vdir);

We pass our current virtual page directory from the calling vmm_create function we are currently working on.

void vmm_sync(unsigned int *vdir){

struct tvmmDir *cl;

for(cl = g_vmm_dir; cl != 0; cl = cl->next){

if(cl != vdir){

for(x = 0; x < ((0x1FFFFFFF>>22) - 1); ++x){

cl->vdir[x] = vdir[x];

}

}

}

return;

}

Since the current address space is could be considered our working copy, and it should contain the same as every other directory at least when we first started execution in this address space. All we should do is update all others from this one to reflect any changes made. You will almost notice that annoying constant repersenting the kernel only using the lower two gigabytes of the entire four gigabyte address space. Remember, we do not want to copy a different processes tables onto another as that would create wierd events..can you imagine?

Create (Continued)

Here is where we left from.

...

struct tvmmDir *nl;

unsigned int *dir = (unsigned int*)pmm_vmm_alloc();

// add new dir to global dir link list

nl = (struct tvmmDir*)<YOUR MALLOC IMPLEMENTATION>;

nl->vmm = dir;

if(g_vmm_dir == 0){

nl->next = 0;

g_vmm_dir = nl;

}else{

nl->next = g_vmm_dir;

g_vmm_dir = nl->next;

}

// map into current virtual address space for accessing

vmm_map_single(vdir, (unsigned int)dir, (unsigned int)dir, flags);

// clear entire directory

for(x = 0; x < 1024; ++x){

dir[x] = 0;

}

....

Now, that we have implemented a syncronization function lets use it!

unsigned int* vmm_create(unsigned int *vdir, unsigned int flags){

struct tvmmDir *nl;

unsigned int *dir = (unsigned int*)pmm_vmm_alloc();

// add new dir to global dir link list

nl = (struct tvmmDir*)<YOUR MALLOC IMPLEMENTATION>;

nl->vmm = dir;

if(g_vmm_dir == 0){

nl->next = 0;

g_vmm_dir = nl;

}else{

nl->next = g_vmm_dir;

g_vmm_dir = nl->next;

}

// map into current virtual address space for accessing

vmm_map_single(vdir, (unsigned int)dir, (unsigned int)dir, flags);

// clear entire directory

for(x = 0; x < 1024; ++x){

dir[x] = 0;

}

// syncronize

vmm_sync(vdir);

return dir;

}

Now, we have a complete vmm_create function but we are missing something. The ability to cope if vdir is null. Lets make some changes.

unsigned int* vmm_create(unsigned int *vdir, unsigned int flags){

struct tvmmDir *nl;

unsigned int *dir = (unsigned int*)pmm_vmm_alloc();

// add new dir to global dir link list

nl = (struct tvmmDir*)<YOUR MALLOC IMPLEMENTATION>;

nl->vmm = dir;

if(g_vmm_dir == 0){

nl->next = 0;

g_vmm_dir = nl;

}else{

nl->next = g_vmm_dir;

g_vmm_dir = nl->next;

}

if(vdir != 0){

// map into current virtual address space for accessing

vmm_map_single(vdir, (unsigned int)dir, (unsigned int)dir, flags);

// clear entire directory

for(x = 0; x < 1024; ++x){

dir[x] = 0;

}

// syncronize

vmm_sync(vdir);

}else{

for(x = 0; x < 1024; ++x){

dir[x] = 0;

}

vmm_map_single(dir, (unsigned int)dir, (unsigned int)dir, flags);

// no syncronization since this is the first ever page directory.

}

return dir;

}

Now, you will notice we still add a item to the link list, but we branch and just clear and make a mapping to it's self.

Finishing Up

To finsh up lets try to run down a example scenario with some code. For starters lets say the kernel just booted up, and you need to get the memory managment going. Here is what I would round about do.

pmm_setmaxmem(AQUIRE_MAXMEM_INSTALLED);

pmm_init(FIRST_CALL_WITH_BIGGEST_FREE_MEMORY_RANGE);

for(;;){

// LOOK THROUH ANY OTHER MEMORY RANGES

pmm_init(....);

}

// STIP THE KERNEL'S LOADED ADDRESS OUT.

pmm_strip(KERNEL_START, KERNEL_PAGECOUNT);

cr3 = vmm_create(0);

vmm_map_range(cr3, KERNEL_START, KERNEL_START, KERNEL_PAGECOUNT, VMM_KERNEL);

vmm_map_range(cr3, 0xb8000, 0xb8000, 1, VMM_KERNEL);

vmm_map_range(cr3, GDT_AND_IDT_START, GDT_AND_IDT_START, GDT_AND_IDT_PAGECOUNT, VMM_KERNEL);

// TURN ON PAGING

// ................................

// ... create processes virtual memory ...

childcr3 = vmm_create(cr3);

// call vmm functions with childcr3... get childcr3 ready..

// syncronize all other page directories..

vmm_sync(cr3);

// jump to child thread..

// ..............................

Extras

Here are some extras I have.

#define VMM_SUPER 0x4 #define VMM_RW 0x2 #define VMM_PRESENT 0x1 #define VMM_KERNEL (VMM_SUPER|VMM_RW|VMM_PRESENT)

Flags for using with the flag argument for the vmm_ function calls.

Feedback

You can provide feedback at <[email protected]>