VFS

Introduction

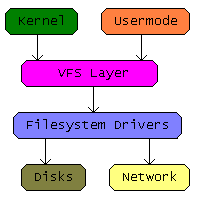

A Virtual File System (VFS) is neither an on-disk file system nor a network file system. It is therefore neither an on-disk data structure (like ReiserFS, NTFS or FAT) nor a network protocol (like NFS). It is just an abstraction that many operating systems provide to applications, so don't let the name scare you.

Virtual file systems separate the high-level interface to the file system from the low-level interfaces that different implementations (FAT, ext3, etc.) may require, thus giving applications transparent access to storage devices. This allows for greater flexibility, especially if one wants to support several file systems.

The VFS sits between the higher-level user space operations on the file system and the file system drivers. Having a VFS interface implies having the idea of mount points. A mount point is a path inside the virtual file system tree that represents an in-use file system. This file system may be on a local device, in memory or on a networked device.

Disambiguation of the underlying semantics of a VFS

A VFS, in concrete terms, provides a uniform access path and subsystem for a group of file systems of the same type. To date, there are three common types of file system: hierarchical (the most common), tag-based and database file systems.

VFS Models

Indexed (*-DOS/NT)

The VFS model used in *-DOS and Windows NT assigns a letter of the alphabet to each accessible file system on the machine. This type of VFS is the simplest to implement, but it is restricted to 26 mounted file systems and grows more complex as features are added, so you might want to use numbers instead of letters to lift that limit. When a file is requested, the VFS checks which drive the file is on and then passes the request on to the relevant driver.
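As a rough illustration of the indexed model, the following sketch keeps one table slot per drive letter. The names `fs_driver`, `drive_mount` and `drive_resolve` are made up for this example and do not come from any real DOS or NT interface.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

/* Hypothetical driver handle; a real VFS would keep function pointers here. */
struct fs_driver {
    const char *name;
};

/* One slot per drive letter, A: through Z:. NULL means nothing is mounted. */
static struct fs_driver *drive_table[26];

/* Mount a driver at a drive letter. Returns 0 on success, -1 on error. */
int drive_mount(char letter, struct fs_driver *drv)
{
    int idx = toupper((unsigned char)letter) - 'A';
    if (idx < 0 || idx >= 26 || drv == NULL || drive_table[idx] != NULL)
        return -1;
    drive_table[idx] = drv;
    return 0;
}

/*
 * Resolve a path such as "C:\DIR\FILE.TXT". Returns the driver mounted at
 * that letter (or NULL) and, on success, stores a pointer to the part of
 * the path after "C:" in *rest so it can be handed to the driver.
 */
struct fs_driver *drive_resolve(const char *path, const char **rest)
{
    assert(path != NULL && rest != NULL);
    if (path[0] == '\0' || path[1] != ':')
        return NULL;
    int idx = toupper((unsigned char)path[0]) - 'A';
    if (idx < 0 || idx >= 26 || drive_table[idx] == NULL)
        return NULL;
    *rest = path + 2;
    return drive_table[idx];
}
```

The lookup is a single array index, which is why this model is so easy to implement, and the fixed array size is exactly where the 26-entry limit comes from.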

Mount Point List

A more complex model is that of a mount point list. This system maintains a list of mounted file systems and where they are mounted. When a file is requested, the list is scanned to determine which file system the file is on. The rest of the path is then passed on to the file system driver to fetch the file. This design is quite versatile but becomes slow when a large number of mount points is in use.
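One possible way to scan such a list is a longest-prefix match, sketched below. The `struct mount` layout and the `mount_find` function are assumptions made for illustration; the sketch also assumes absolute, already normalized paths and mount points without a trailing slash (except the root).

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct mount {
    const char *path;     /* mount point, e.g. "/" or "/mnt/usb" */
    const char *fs_name;  /* stand-in for a real driver handle */
    struct mount *next;
};

/* Returns nonzero if mount point `mp` is a whole-component prefix of `path`. */
static int mount_covers(const char *mp, const char *path)
{
    size_t n = strlen(mp);
    if (strncmp(mp, path, n) != 0)
        return 0;
    if (n == 1 && mp[0] == '/')   /* the root covers every absolute path */
        return 1;
    return path[n] == '\0' || path[n] == '/';
}

/*
 * Scan the list for the longest mount point that covers `path`.
 * On success *rest receives the remainder to pass to the driver.
 */
const struct mount *mount_find(const struct mount *list, const char *path,
                               const char **rest)
{
    const struct mount *best = NULL;
    size_t best_len = 0;

    assert(path != NULL && rest != NULL);
    for (const struct mount *m = list; m != NULL; m = m->next) {
        size_t n = strlen(m->path);
        if (n > best_len && mount_covers(m->path, path)) {
            best = m;
            best_len = n;
        }
    }
    if (best != NULL) {
        /* For the root mount keep the leading '/', otherwise skip the prefix. */
        *rest = (best_len == 1) ? path : path + best_len;
    }
    return best;
}
```

Every lookup walks the whole list, which is the speed problem mentioned above when many file systems are mounted.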

Node Graph (Unix)

A VFS model that can be very efficient is the node graph. This model maintains a graph of file system nodes, each of which can represent a file, folder, mount point or other type of file. A node graph can be faster to traverse than a list, but it is more complex and, if a large number of nodes is needed, can take up a large amount of memory.

Each node in a node graph stores the name, permissions and inode within a structure, along with pointers to file I/O functions like Read, Write, Read Dir and Find Dir.
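A node of this kind might look roughly like the structure below. All field and function names (`vfs_node`, `vfs_ops`, `vfs_finddir_cached`, `vfs_lookup`) are hypothetical, Read Dir is left out for brevity, and the sketch assumes that children are already cached in memory as a linked list.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct vfs_node;

/* Per-driver operation table; an entry may be NULL if unsupported. */
struct vfs_ops {
    size_t (*read)(struct vfs_node *n, uint64_t off, size_t len, void *buf);
    size_t (*write)(struct vfs_node *n, uint64_t off, size_t len, const void *buf);
    struct vfs_node *(*finddir)(struct vfs_node *dir, const char *name);
};

struct vfs_node {
    char name[64];
    uint32_t mode;               /* permission bits */
    uint32_t inode;              /* driver-specific identifier */
    const struct vfs_ops *ops;
    struct vfs_node *children;   /* first child, for directories */
    struct vfs_node *sibling;    /* next entry in the same directory */
};

/* A finddir implementation for nodes whose children are already in memory. */
struct vfs_node *vfs_finddir_cached(struct vfs_node *dir, const char *name)
{
    for (struct vfs_node *c = dir->children; c != NULL; c = c->sibling)
        if (strcmp(c->name, name) == 0)
            return c;
    return NULL;
}

/* Walk an absolute path one component at a time, starting at `root`. */
struct vfs_node *vfs_lookup(struct vfs_node *root, const char *path)
{
    struct vfs_node *cur = root;

    assert(root != NULL && path != NULL);
    while (cur != NULL && *path != '\0') {
        char comp[64];
        size_t n;

        while (*path == '/')          /* skip separators */
            path++;
        n = strcspn(path, "/");       /* length of the next component */
        if (n == 0)
            break;                    /* trailing slash or end of path */
        if (n >= sizeof comp || cur->ops == NULL || cur->ops->finddir == NULL)
            return NULL;
        memcpy(comp, path, n);
        comp[n] = '\0';
        cur = cur->ops->finddir(cur, comp);
        path += n;
    }
    return cur;
}
```

Because each node carries its own operation table, a mount point can be represented simply as a node whose `ops` belong to a different driver.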

Compromise

These models are the basic designs on which a VFS can be built, but each has its problems. Scanning through a list of mount points and then passing the remainder of the path to the file system is usable for a simple OS, but it requires large amounts of repeated code because each driver must be able to parse a path reliably. A node graph, on the other hand, requires a node for each file and directory on the system to be present in memory at the same time; otherwise features like mount points would have to be constantly refreshed.

A compromise between these two systems is to keep a list of mounted file systems, use it to determine which mount point a file lies on, and then store file information and methods in nodes that do not have to reside in memory permanently.

File Abstraction

One of the most important tasks of an OS is to create the file abstraction, that is, to hide the underlying file system structure and sector layout from the application. This is usually implemented by the open, read, write and close system calls (this holds for both POSIX and Windows).

Opening a file

This function is responsible for finding or creating a context (VFS inode or vnode) for the given filename. This usually means iterating over the aforementioned VFS nodes in memory and, if the file is not found, consulting the mount list (see above), the file system driver and the storage driver to get the required metadata (in POSIX terminology, struct stat). The open system call returns an identifier that connects the opened file with that context. This identifier can be globally unique (like an FCB) or local to the process (an integer, typical in UNIX-like systems). The context it refers to should hold information such as:

- storage device where the opened file resides

- structure describing which sectors belong to this file on the storage (or a simple inode reference)

- file size

- file position (seek offset)

- file access mode (read / write)

It is advisable to create another abstraction layer here and divide the context in two: one structure describing all the information from the FS point of view (vnode), and another structure for the application's point of view (open file struct, which only references the vnode). The advantage is that you can keep a counter of open streams in the vnode, which you'll need later. Remember that more than one process can open the same file for reading, so you cannot put the file position in the vnode directly. Whether you allocate this open file list in the process' address space or in the VFS is up to you. But vnodes must be stored in a central place in the VFS, otherwise you won't be able to avoid race conditions and implement proper file locking.
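The split between the two structures could be sketched as follows. The names `vnode`, `open_file`, `fd_table` and `vfs_open_vnode` are illustrative only, the descriptor table is a single global array for simplicity, and locking is omitted entirely.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* File system view: one per file, shared by everyone who has it open. */
struct vnode {
    uint32_t dev;         /* storage device the file resides on */
    uint32_t inode;       /* the file system's own identifier */
    uint64_t size;        /* file size in bytes */
    unsigned open_count;  /* number of open streams referencing this vnode */
};

enum { VFS_READ = 1, VFS_WRITE = 2 };

/* Application view: one per successful open. */
struct open_file {
    struct vnode *vn;
    uint64_t pos;         /* seek offset, private to this stream */
    int mode;             /* VFS_READ and/or VFS_WRITE */
};

#define MAX_OPEN 16
static struct open_file *fd_table[MAX_OPEN];

/*
 * Create a new stream on an already resolved vnode.
 * Returns a small integer descriptor, or -1 if no slot or memory is left.
 */
int vfs_open_vnode(struct vnode *vn, int mode)
{
    assert(vn != NULL);
    for (int fd = 0; fd < MAX_OPEN; fd++) {
        if (fd_table[fd] == NULL) {
            struct open_file *of = malloc(sizeof *of);
            if (of == NULL)
                return -1;
            of->vn = vn;
            of->pos = 0;
            of->mode = mode;
            vn->open_count++;   /* needed later when closing */
            fd_table[fd] = of;
            return fd;
        }
    }
    return -1;
}
```

Two opens of the same file share one `vnode` but get separate `open_file` entries, so each stream keeps its own position.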

Reading or writing

Applications know nothing about the on-disk layout; all they can see is a linear, contiguous data stream. This means that the heavy duty of translation falls to the VFS layer. This is a very complex task, and notoriously hard to get right the first time. A typical read should do the following steps:

1. Using the file position and the file size in the context, the VFS must determine how many sectors are needed (this depends on the FS logical sector size). It's possible that the file position is greater than or equal to the file size, in which case the answer is zero and the VFS must provide a way to indicate the EOF condition. If file position plus read size is greater than the file size, then the size argument must be lowered. Note that the file position may not be on a sector boundary, and even a read of 2 bytes can span two sectors.

2. Using the file storage information in the context, the VFS must create a list of sector addresses for the device. This can be done in a uniform (FS independent) way, although file systems tend to use very different allocation algorithms (FAT clusters, inode indirect tree, extents, etc.), so most VFS implementations delegate this task to the file system driver. Don't forget that file systems use logical sectors.

3. The list of logical sectors must be converted to a list of physical sectors. This usually means adding the starting sector of the partition the file system is on, but could also mean more or fewer items in the list if the logical and physical sector sizes differ. In short, the VFS (knowing the characteristics of the underlying storage device) must convert the LSN list into an LBA list.

4. Now that the list of physical sectors is known, the VFS can use readsector calls in the appropriate disk driver. The storage device is stored in the context (providing the device parameter for the call), and the kernel's device tree should tell which driver to use.

5. Once the sectors are in memory, the VFS needs to copy their contents into the reader's buffer. If the file position is not a multiple of the sector size, then the first sector starts with extra data that the VFS must skip. If file position plus read size is not a multiple of the sector size, then the last sector ends with extra data that the VFS must discard.

6. The VFS must do housekeeping in the context. This typically means adjusting the file position and the like.

7. The VFS may now notify the reader (in POSIX that means to wake up the blocked process).
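The steps above can be sketched in a heavily simplified form. The example below makes several assumptions that are not part of the description: the "disk" is a RAM array, logical and physical sectors are both 512 bytes, the file is stored contiguously (so step 2 is a simple addition rather than a call into the file system driver), and sectors are fetched one at a time instead of building lists first. `file_ctx`, `read_sector` and `vfs_read` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SECTOR_SIZE 512u
#define DISK_SECTORS 64u

/* RAM disk standing in for the real disk driver. */
static uint8_t disk[DISK_SECTORS][SECTOR_SIZE];

static int read_sector(uint64_t lba, uint8_t *out)
{
    if (lba >= DISK_SECTORS)
        return -1;
    memcpy(out, disk[lba], SECTOR_SIZE);
    return 0;
}

struct file_ctx {
    uint64_t part_start;  /* first LBA of the partition */
    uint64_t first_lsn;   /* first logical sector of the file (contiguous) */
    uint64_t size;        /* file size in bytes */
    uint64_t pos;         /* current seek offset */
};

/* Returns the number of bytes read, 0 at EOF, or -1 on a device error. */
long vfs_read(struct file_ctx *f, void *buf, size_t len)
{
    uint8_t sector[SECTOR_SIZE];
    uint8_t *dst = buf;
    size_t done = 0;

    assert(f != NULL && buf != NULL);

    /* Step 1: detect EOF and lower the size if it runs past the end. */
    if (f->pos >= f->size)
        return 0;
    if (len > f->size - f->pos)
        len = (size_t)(f->size - f->pos);

    while (done < len) {
        /* Step 2: logical sector holding the current byte. */
        uint64_t lsn = f->first_lsn + f->pos / SECTOR_SIZE;
        /* Step 3: logical to physical, here just the partition offset. */
        uint64_t lba = f->part_start + lsn;
        /* Step 5 bookkeeping: bytes to skip at the front, bytes to keep. */
        size_t skip = (size_t)(f->pos % SECTOR_SIZE);
        size_t chunk = SECTOR_SIZE - skip;
        if (chunk > len - done)
            chunk = len - done;

        /* Step 4: fetch the sector from the disk driver. */
        if (read_sector(lba, sector) != 0)
            return done > 0 ? (long)done : -1;

        memcpy(dst + done, sector + skip, chunk);
        done += chunk;
        f->pos += chunk;   /* Step 6: advance the file position. */
    }
    return (long)done;
}
```

Step 7 (waking the reader) has no counterpart here because the sketch is synchronous; a real kernel would typically queue the sector requests and unblock the process once they complete.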

Write has similar steps, but there the VFS must allocate logical sectors (or clusters, stripes, etc., whatever the file system uses for allocation) by calling the file system driver. With writes, the VFS should read the first and the last sector at a minimum, because they may contain data outside the written range that must be preserved (see step 5 above).
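The need to read partially overwritten sectors first can be illustrated with a small read-modify-write sketch. It assumes a RAM disk, 512-byte sectors and sectors that are already allocated and contiguous, so the allocation call into the file system driver is left out. `write_bytes` and the `sectors_read` counter are hypothetical and exist only for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SECTOR_SIZE 512u
#define DISK_SECTORS 64u

static uint8_t disk[DISK_SECTORS][SECTOR_SIZE];
static unsigned sectors_read;   /* counts device reads, for illustration */

static void read_sector(uint64_t lba, uint8_t *out)
{
    memcpy(out, disk[lba], SECTOR_SIZE);
    sectors_read++;
}

static void write_sector(uint64_t lba, const uint8_t *in)
{
    memcpy(disk[lba], in, SECTOR_SIZE);
}

/*
 * Write `len` bytes at byte offset `off` inside a region that starts at
 * LBA `start`. Only sectors that are partially overwritten are read first.
 */
void write_bytes(uint64_t start, uint64_t off, const void *buf, size_t len)
{
    const uint8_t *src = buf;
    uint8_t sector[SECTOR_SIZE];

    assert(buf != NULL);
    assert(start + (off + len + SECTOR_SIZE - 1) / SECTOR_SIZE <= DISK_SECTORS);

    while (len > 0) {
        uint64_t lba = start + off / SECTOR_SIZE;
        size_t skip = (size_t)(off % SECTOR_SIZE);
        size_t chunk = SECTOR_SIZE - skip;
        if (chunk > len)
            chunk = len;

        /* A partial sector keeps bytes we must not clobber, so read it. */
        if (chunk < SECTOR_SIZE)
            read_sector(lba, sector);

        memcpy(sector + skip, src, chunk);
        write_sector(lba, sector);

        src += chunk;
        off += chunk;
        len -= chunk;
    }
}
```

In this sketch a write that covers a whole sector never touches the device for reading, so only the first and last sectors of a large unaligned write cost an extra read.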

Closing a file

The close function is responsible for saving any modifications to the file to storage (in the case of writing) and updating the file system metadata. This usually means a call into the file system driver. It then frees the memory allocated for the open file list entry. If you have a separate vnode and open file list (you should), then the VFS must decrement the vnode's open streams counter and, if it reaches zero, also free the vnode.
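A minimal sketch of that close path is shown below. It assumes heap-allocated vnodes and open file entries, a global descriptor table, and a hypothetical `sync` hook standing in for the call into the file system driver; none of these names come from a real kernel.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

struct vnode {
    unsigned open_count;              /* open streams referencing this vnode */
    int dirty;                        /* nonzero if data or metadata changed */
    void (*sync)(struct vnode *vn);   /* hypothetical driver write-back hook */
};

struct open_file {
    struct vnode *vn;
    unsigned long pos;
};

#define MAX_OPEN 16
static struct open_file *fd_table[MAX_OPEN];

/* Returns 0 on success, -1 for an invalid or unused descriptor. */
int vfs_close(int fd)
{
    if (fd < 0 || fd >= MAX_OPEN || fd_table[fd] == NULL)
        return -1;

    struct open_file *of = fd_table[fd];
    struct vnode *vn = of->vn;
    assert(vn != NULL && vn->open_count > 0);

    /* Let the driver save modifications and update metadata. */
    if (vn->dirty && vn->sync != NULL) {
        vn->sync(vn);
        vn->dirty = 0;
    }

    /* Release the per-stream entry. */
    fd_table[fd] = NULL;
    free(of);

    /* Drop the vnode once the last stream is gone. */
    if (--vn->open_count == 0)
        free(vn);
    return 0;
}
```

Whether the vnode is really freed at a count of zero or kept in a cache for later reuse is a design choice; the sketch takes the simpler route described above.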

External Links

- 2001 Linux VFS article part 1 and part 2 on FreeOS

- 1996 Linux VFS article in the Linux Kernel Hackers' Guide

- The Use of Name Spaces in Plan 9 - Describes how Plan 9 takes advantage of both private namespaces and a VFS that matches the 9P distributed file system protocol.

- 1986 "Vnodes: An Architecture for Multiple File System Types in Sun UNIX" (Kleiman) [1] describes a common approach to VFS